AI Primer 03: Emergence, Latent Space, and the Semantic World

Why AI Appears Smarter Than It Actually Is

(Ephemeral Release · Read Before Removal)

Most discussions about AI stop at tools, prompts, and productivity.

What actually determines whether AI compounds value over time is none of that.

It is whether you hold the correct mental model of what this system is and what it is not.

Many people, after prolonged use of AI, experience a subtle but powerful illusion:

AI seems to understand me.

AI seems to reason.

AI seems to grasp the world better than I do.

This essay exists to dismantle that illusion.

Not merely because it is false, but because it is dangerously convincing.

I. Emergence Is Not Awakening, It Is Structural Visibility

“Emergence” is often described as something mystical, even romanticized as a form of intelligence awakening.

That framing is wrong.

A more precise definition is this:

Emergence occurs when system scale, complexity, and interaction density cross a threshold, making previously implicit patterns observable.

Nothing new is born.

No consciousness appears.

No intent emerges.

What changes is visibility.

In large language models, the combination of:

parameter scale

compression

long context windows

and constraint density

causes latent structures to display continuity, coherence, and behavior that resembles reasoning.

Emergence does not create intelligence.

It reveals structure that was already there.

II. Latent Space Is Not Knowledge, It Is Relational Geometry

A foundational misconception is that LLMs “store knowledge”.

They do not.

A more accurate description is:

Latent space is a high-dimensional geometry of relationships between linguistic and conceptual elements.

It does not contain truth.

It contains structure.

You can think of it as an abstract map where:

similar concepts cluster

frequently co-occurring ideas form pathways

interchangeable expressions overlap

incompatible narratives diverge
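The map metaphor can be made concrete with a toy example. The sketch below assumes hand-picked three-dimensional vectors (real models learn embeddings with hundreds or thousands of dimensions) and measures closeness with cosine similarity, one common choice for such spaces. The words and coordinates are illustrative assumptions, not values taken from any actual model.

```python
import math

# Hypothetical 3-D "embeddings", hand-picked for illustration only.
# Real models learn vectors with hundreds or thousands of dimensions from data.
EMBEDDINGS = {
    "cat": [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.75, 0.20],
    "dog": [0.80, 0.90, 0.15],
    "inflation": [0.10, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean 'same direction'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word):
    """Return the other word whose vector is most similar to `word`'s vector."""
    query = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    return max(others, key=lambda w: cosine_similarity(query, EMBEDDINGS[w]))


if __name__ == "__main__":
    print("cat ~ kitten:   ", round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["kitten"]), 3))
    print("cat ~ inflation:", round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["inflation"]), 3))
    print("nearest to cat: ", nearest("cat"))
```

Nothing in these vectors says whether any statement about cats is true. The geometry only records which items sit near which.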

When an LLM produces an answer, it is not recalling facts or executing logic.

It is:

navigating latent space along high-probability trajectories toward regions that resemble “good answers” under the given context.

This explains why AI can:

hallucinate confidently

produce internally consistent but externally false reasoning

collapse when confronted with real-world constraints

The optimization target is linguistic plausibility, not correspondence with reality.
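The idea of following high-probability trajectories can be sketched with a toy next-word table. The table, its probabilities, and the example sentence are invented for illustration, and the decoding rule shown is greedy decoding (always take the most probable next word), which is only one of several strategies real systems use. Because the table records only which words tend to follow which, the most probable path can be fluent and still wrong.

```python
# Hypothetical next-word probabilities keyed by the previous two words.
# The numbers are invented; a real LLM conditions on the whole context
# through a neural network rather than a lookup table.
NEXT_WORD = {
    ("of", "australia"): {"is": 1.0},
    ("australia", "is"): {"sydney": 0.6, "canberra": 0.4},
    ("is", "sydney"): {".": 1.0},
    ("is", "canberra"): {".": 1.0},
}

# External ground truth. The decoder below never consults it.
FACTS = {"capital of australia": "canberra"}


def greedy_decode(words, max_steps=5):
    """Repeatedly append the single most probable next word (greedy decoding)."""
    words = list(words)
    for _ in range(max_steps):
        dist = NEXT_WORD.get(tuple(words[-2:]))
        if dist is None:  # no known continuation: stop
            break
        next_word = max(dist, key=dist.get)
        words.append(next_word)
        if next_word == ".":
            break
    return words


if __name__ == "__main__":
    prompt = ["the", "capital", "of", "australia", "is"]
    output = greedy_decode(prompt)
    answer = output[len(prompt)]
    print(" ".join(output))  # the capital of australia is sydney .
    print("matches reality:", answer == FACTS["capital of australia"])  # False
```

Sampling instead of always taking the maximum would sometimes produce `canberra` in this toy, but only by chance: nothing in the procedure checks the answer against `FACTS`.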

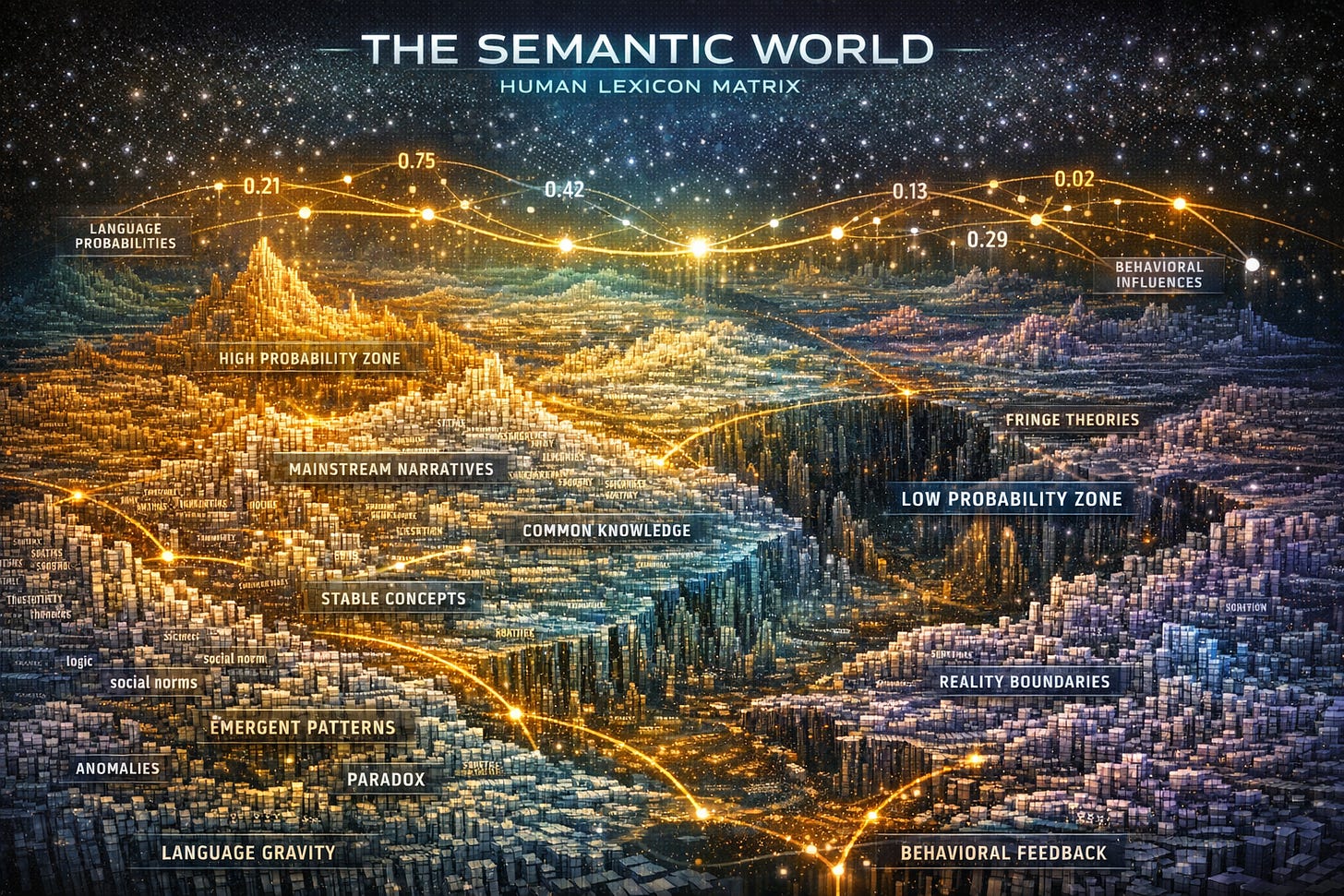

III. Introducing the Semantic World: A Higher-Order Space

This brings us to the core concept: the Semantic World.

If latent space is the internal structural geometry of a model,

then the Semantic World is a broader, more dangerous layer:

The Semantic World is the high-level conceptual space constructed by human language, narratives, symbols, and explanatory frameworks.

It does not belong solely to the model.

It does not belong solely to text.

It exists in:

economic paradigms

market narratives

political discourse

media framing

social consensus boundaries

and individual cognitive models

These are not reality itself.

They are:

reality after it has been named, interpreted, compressed, and structured by language.

The true power of LLMs is not factual recall.

It is their ability to navigate, reorganize, and compress the Semantic World at high speed.

What AI gives you is rarely truth.

What it gives you is:

the smoothest, most coherent, most readable path through semantic space that resembles truth.

IV. Why AI Feels Like It Is Reasoning

AI outputs feel like reasoning because they exhibit:

continuity

structural completeness

confident tone

contextual consistency

narrative closure

The human brain is wired to interpret these signals as:

understanding, intention, intelligence.

In reality, what often happens is simpler:

The model finds a highly fluent path through the Semantic World, and the human mind misidentifies fluency as reasoning.

This is not AI awakening.

It is human pattern recognition completing the picture.

V. The Most Dangerous Mistake: Confusing Compression With Insight

The core capability of LLMs is compression.

They compress:

massive redundancy

repeated explanations

fragmented discourse

into clean, elegant outputs.

This is extremely useful.

But it is critical to distinguish:

compression ≠ insight

coherence ≠ correctness

elegance ≠ applicability
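A loose analogy uses ordinary lossless compression. The sketch below runs Python's standard `zlib` module over deliberately repetitive text: the redundancy shrinks dramatically, yet decompression returns exactly the original bytes and nothing more. This is an analogy only; an LLM's learned compression is lossy and generative, unlike `zlib`, and the sample sentence is an arbitrary placeholder.

```python
import zlib

# Deliberately redundant "discourse": one explanation repeated 200 times.
redundant = ("The same point, explained again. " * 200).encode("utf-8")

compressed = zlib.compress(redundant, 9)  # level 9 = maximum compression
restored = zlib.decompress(compressed)

print(len(redundant), "bytes ->", len(compressed), "bytes")
print("identical after round trip:", restored == redundant)  # True
```

The result is far smaller and perfectly faithful, yet it contains no idea that was not already in the input.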

True insight carries cost.

It emerges from:

contradiction

failure

loss

friction

real-world penalty functions

None of these exist inside the Semantic World.

They only exist outside, in reality.

VI. Why Advanced Users Feel AI “Understands Them Better Over Time”

Many users claim that AI “learns them”.

This is incorrect.

What actually happens is:

the user becomes better at providing constraints and steering probability distributions.

As objectives sharpen and boundaries become explicit, outputs migrate:

from the center of the distribution (generic templates)

to the tails of the unconstrained distribution (high-density, specialized outputs)

AI does not learn you.

You learn how to cut paths through the Semantic World.
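One rough way to picture this is conditioning: each explicit constraint rules out candidates, and the remaining probability mass is renormalized over what survives. The candidates, probabilities, and tags below are invented, and `condition` is a hypothetical helper. Real prompting shifts a model's distribution through its context rather than by explicit filtering, so treat this as an analogy, not as a description of how any model works internally.

```python
# Hypothetical candidate outputs: (invented prior probability, descriptive tags).
CANDIDATES = {
    "generic productivity template": (0.50, set()),
    "generic pros-and-cons list": (0.30, set()),
    "rate-hike scenario tree": (0.15, {"macro"}),
    "liquidity-stress playbook for a small fund": (0.05, {"macro", "small-fund"}),
}


def condition(candidates, required_tags):
    """Keep candidates that carry every required tag, then renormalize to sum to 1."""
    kept = {
        name: prob
        for name, (prob, tags) in candidates.items()
        if required_tags <= tags  # set inclusion: all constraints satisfied
    }
    total = sum(kept.values())
    if total == 0:
        return {}
    return {name: prob / total for name, prob in kept.items()}


if __name__ == "__main__":
    for constraints in [set(), {"macro"}, {"macro", "small-fund"}]:
        dist = condition(CANDIDATES, constraints)
        top = max(dist, key=dist.get)
        print(sorted(constraints), "->", top, f"(p = {dist[top]:.2f})")
```

The output that held only 5% of the mass with no constraints ends up with all of it once both constraints are stated. The candidates did not change; the conditioning did.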

VII. Why Nonlinear Domains Feel More “Alive”

In domains such as:

macro analysis

strategy

systems design

narrative construction

there is no single correct solution.

These domains tolerate:

ambiguity

approximation

competing frameworks

This aligns perfectly with latent-space navigation.

In contrast, in mathematics or safety-critical systems, approximation is fatal.

The difference is not intelligence.

It is error tolerance.

VIII. The Correct Mental Model

AI is not:

a thinker

an oracle

a judge of truth

It is best understood as:

a semantic-space navigator, a structural compressor, and a candidate-path generator.

Truth convergence happens only in one place:

the real-world feedback loop.

Markets, execution, physics, user behavior, PnL, failure.

AI explores possibilities.

Reality collapses them.
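The division of labor above can be sketched as a generate-then-verify loop. All names here are hypothetical: `propose_candidates` stands in for the model and returns answers with invented plausibility scores, and `reality_check` stands in for the external feedback loop, which in this toy case is simply doing the arithmetic. In practice the check might be a test suite, an actual execution, or a market outcome.

```python
from typing import Callable, List, Optional, Tuple


def propose_candidates() -> List[Tuple[int, float]]:
    """Hypothetical stand-in for a model: answers to 'what is 17 * 24?'
    paired with invented plausibility scores. Nothing here checks correctness."""
    return [(418, 0.5), (408, 0.3), (428, 0.2)]


def reality_check(answer: int) -> bool:
    """Stand-in for the real-world feedback loop: here, actually doing the arithmetic."""
    return answer == 17 * 24


def first_verified(
    candidates: List[Tuple[int, float]], verify: Callable[[int], bool]
) -> Optional[int]:
    """Try candidates from most to least plausible; return the first that passes."""
    for answer, _score in sorted(candidates, key=lambda c: c[1], reverse=True):
        if verify(answer):
            return answer
    return None  # every candidate was rejected by reality


if __name__ == "__main__":
    candidates = propose_candidates()
    most_plausible = max(candidates, key=lambda c: c[1])[0]
    print("most plausible:", most_plausible)  # 418
    print("survives the check:", first_verified(candidates, reality_check))  # 408
```

The ranking comes from the generator; the verdict comes from outside it. If every candidate fails, the loop returns nothing rather than the most fluent wrong answer.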

Conclusion: The Real Battlefield Is Your Semantic World

The dividing line in the AI era is not model access or technical skill.

It is whether you understand and control:

your own Semantic World

your conceptual boundaries

your narrative structures

your constraint systems

your feedback loops

If you do not, AI will anesthetize you with beautifully coherent explanations while silently detaching you from reality.

AI is not dangerous because it lies.

It is dangerous because:

it can construct an extremely comfortable world

in which reality’s penalty function is temporarily forgotten.

Premium Note

The logical continuation is practical, not philosophical:

AI Primer 04: Designing AI Loops That Cannot Self-Deceive

— From Semantic World to Reality Loop

At that point, this stops being a series of essays.

It becomes a personal AI operating system.

And not everyone should run it.